When organizations adopt artificial intelligence, much of the attention goes to the technology: algorithms, compute power, data pipelines, and model accuracy. These are important, but they are not the whole story. In many cases, the bigger risks come not from the technology itself but from the business use cases—the “why” behind the AI.

The Business Use Case Defines the Risk

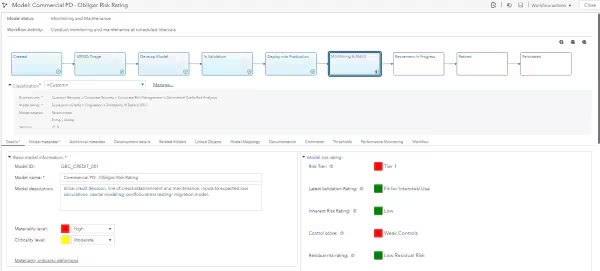

The same model that classifies documents could be deployed to automate loan approvals or to screen job candidates. The underlying technology may not change, but the ethical and regulatory stakes shift dramatically with the use case. In financial services, fairness and freedom from bias in credit decisions are paramount. In healthcare, the accuracy of AI-driven diagnostics can be a matter of life and death. In HR, transparency and accountability are central to ensuring equity in hiring.

By focusing only on technical performance, organizations risk overlooking the fundamental question: Is this an appropriate use of AI for this business context?

Ethics and the “Why”

Ethics is not a bolt-on consideration for AI. It is integral to deciding why a model is being deployed in the first place. For example:

- If AI is used to maximize customer engagement at all costs, it may drive business value while undermining consumer trust through manipulative personalization.

- If it is used in safety-critical systems without clear governance, ethical failures can cascade into societal harms.

- If the use case is justified primarily by efficiency, leaders must weigh whether cost savings are worth potential risks to fairness, privacy, or human dignity.

This is where principled decision-making becomes critical. The choice of use case determines whether the ethical risks are manageable or unacceptable.

The Interaction Between Use Case and Ethics

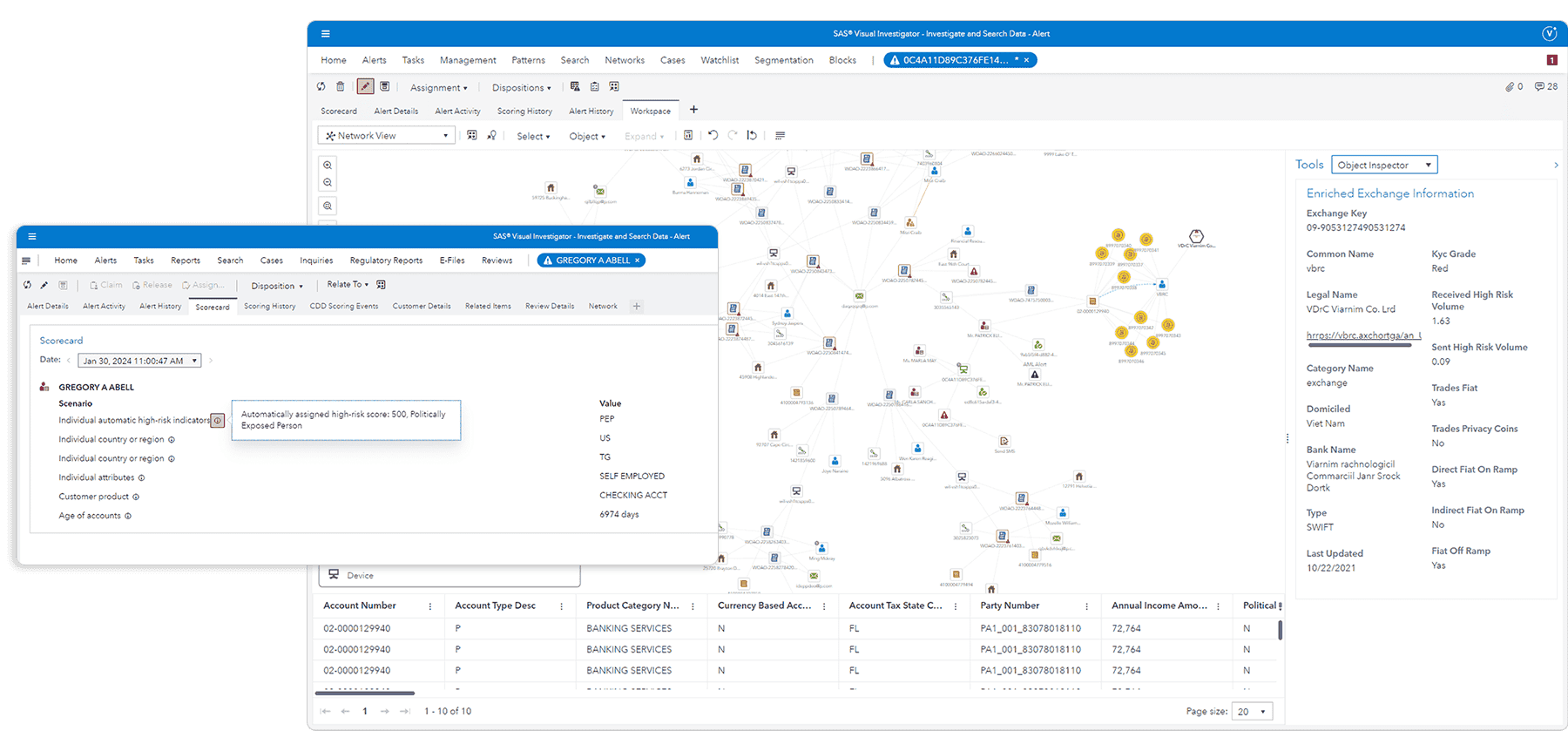

Ethics and use case are inseparable. An AI system for fraud detection will naturally raise questions about false positives, due process, and explainability. An AI system for creative content generation raises different concerns about intellectual property and authenticity. Both use cases involve risks, but the ethical frameworks that apply differ dramatically.

Organizations that treat ethics as a universal checklist will miss this nuance. What’s needed is a risk lens that adapts to the use case. This means evaluating:

- Impact on stakeholders: Who is affected and how?

- Regulatory obligations: What laws and standards apply in this domain?

- Societal expectations: Does the use align with norms of fairness, safety, and accountability?

Why Leaders Must Start With the “Why”

The most responsible AI organizations don’t just ask whether a model can be built or whether it performs well technically. They start by asking whether it should be built at all.

By elevating the business use case above the technology, leaders make space for ethical reflection and responsible governance. That is where sustainable advantage lies: not in the fastest model, but in the most trustworthy one.
