Large Language Models (LLMs) are no longer an emerging technology. They’re already embedded in everyday operations, from customer service chatbots to compliance drafting assistants. But while most conversations focus on the models themselves (accuracy, training data, or vendor choice), the real governance challenge lies elsewhere: in how LLMs are used inside the business.

Here are three things to keep in mind when designing governance for LLMs today.

1. The Use Case Defines the Risk

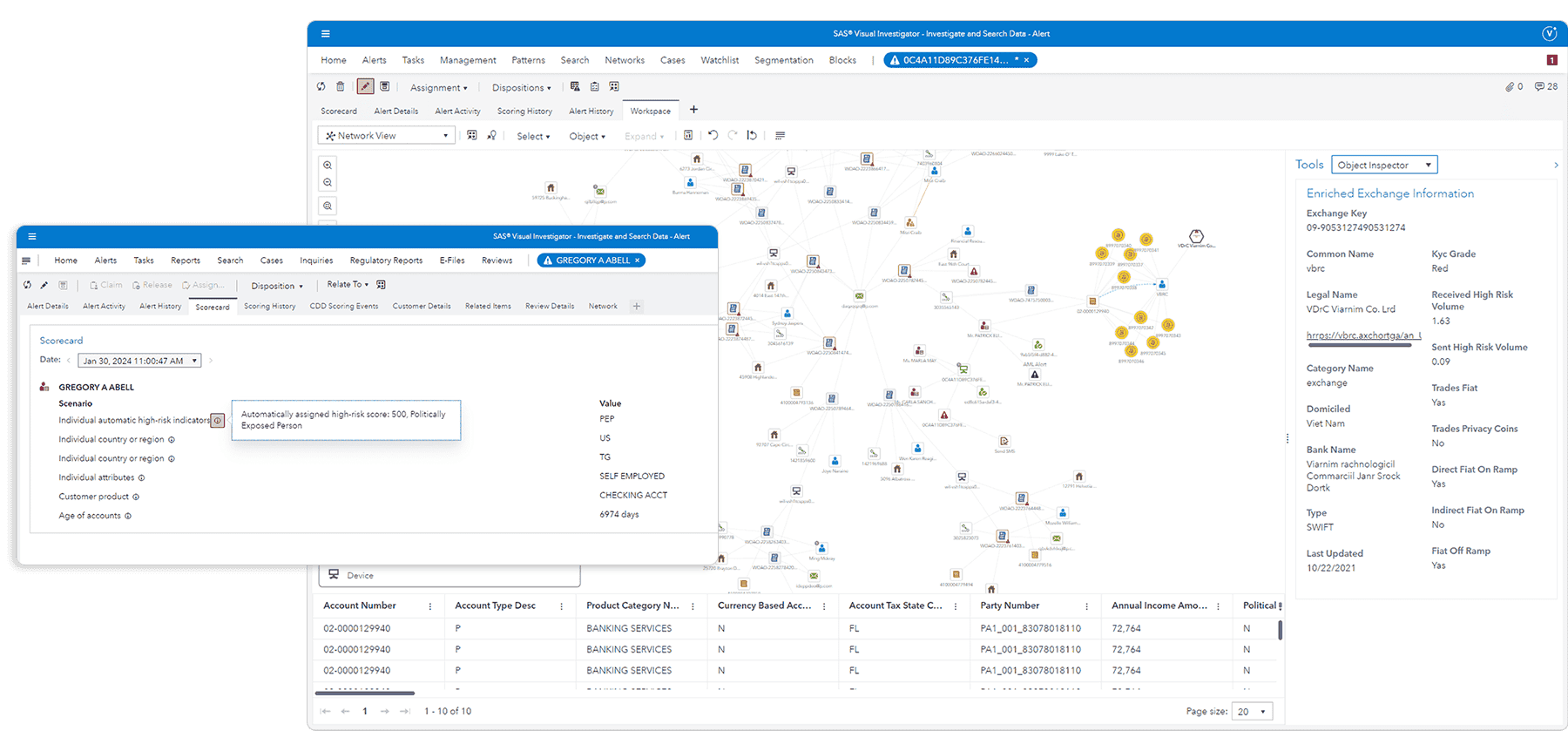

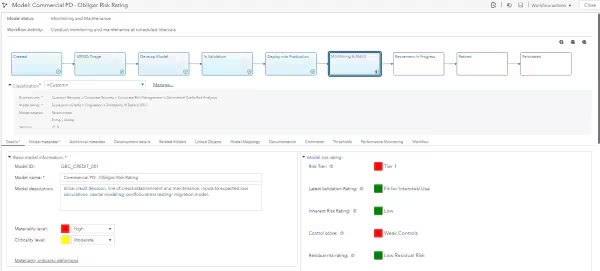

An LLM generating draft marketing content carries very different risks than an LLM generating draft regulatory disclosures. The model might be the same, but the stakes are not. Governance needs to be context-driven. That means classifying LLM use cases by their potential impact on customers, compliance, and decision-making. High-impact use cases require greater oversight, testing, and controls. In practice, this might mean lightweight monitoring for internal productivity tools, but rigorous validation and sign-off for use cases tied to financial advice, credit scoring, or regulatory filings.
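To make the classification idea concrete, here is a minimal sketch in Python. It is an illustration only: the three impact dimensions come from the paragraph above, but the `Impact` scale, the "highest dimension wins" rule, and the `CONTROLS` mapping are all assumptions that a real program would replace with its own risk taxonomy.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Dict, List


class Impact(IntEnum):
    """Hypothetical three-level impact scale."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass(frozen=True)
class UseCase:
    name: str
    customer_impact: Impact
    compliance_impact: Impact
    decision_impact: Impact

    def tier(self) -> Impact:
        # Assumed rule: the single highest dimension sets the overall tier.
        return max(self.customer_impact, self.compliance_impact, self.decision_impact)


# Hypothetical mapping from tier to the oversight it requires.
CONTROLS: Dict[Impact, List[str]] = {
    Impact.LOW: ["lightweight monitoring"],
    Impact.MEDIUM: ["lightweight monitoring", "periodic output sampling"],
    Impact.HIGH: ["rigorous validation", "sign-off before release"],
}


def required_controls(use_case: UseCase) -> List[str]:
    """Return the controls assumed for the use case's tier."""
    return CONTROLS[use_case.tier()]


marketing = UseCase("draft marketing content", Impact.LOW, Impact.LOW, Impact.LOW)
disclosure = UseCase("draft regulatory disclosures", Impact.MEDIUM, Impact.HIGH, Impact.HIGH)
```

The same model could sit behind both `marketing` and `disclosure`. Only the use case record differs, and that record is what drives the level of oversight.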

2. Guardrails Matter More Than the Model

No model is perfect. Even the best LLMs will occasionally produce incorrect, biased, or misleading output. The question isn’t whether you can eliminate that risk, but how you design processes to contain it. This is where governance frameworks play their role. Human-in-the-loop review, clear approval workflows, and audit trails for LLM outputs are examples of guardrails that protect against misuse. For instance, if an LLM is used to draft customer communication, governance should require review by a compliance officer before release. The safeguard, not the model, is what keeps you aligned to standards.
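A guardrail of this kind can be sketched in a few lines of Python. This is an assumed design, not a prescribed one: the `Draft` class, the `approve` and `release` functions, and the audit record fields are hypothetical names chosen for illustration. The point is that release is blocked until a named reviewer approves, and every step leaves a trace.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List, Optional


@dataclass
class Draft:
    """An LLM-generated draft awaiting human review (hypothetical structure)."""
    text: str
    approved_by: Optional[str] = None
    audit_log: List[Dict[str, str]] = field(default_factory=list)

    def record(self, event: str, actor: str) -> None:
        # Append a timestamped entry so each action can be audited later.
        self.audit_log.append({
            "event": event,
            "actor": actor,
            "at": datetime.now(timezone.utc).isoformat(),
        })


def approve(draft: Draft, reviewer: str) -> None:
    """Mark the draft as approved by a human reviewer."""
    draft.approved_by = reviewer
    draft.record("approved", reviewer)


def release(draft: Draft, sender: str) -> str:
    """Return the text for sending, but only if a reviewer has approved it."""
    if draft.approved_by is None:
        draft.record("release_blocked", sender)
        raise PermissionError("Draft has not been approved by a reviewer.")
    draft.record("released", sender)
    return draft.text
```

In this sketch the model never appears. The control lives entirely in the workflow around its output, which is the sense in which the safeguard, not the model, keeps the process aligned with standards.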

3. Data Outcomes Drive Value

Too many organizations treat LLM adoption as a technology project. The smarter approach is to anchor governance in the outcomes you expect. What decision will the output inform? What data should be captured for audit? What metrics define whether the LLM is performing acceptably in its role? A compliance chatbot, for example, should not just be measured on response speed but also on whether it consistently provides guidance aligned with internal policy. By focusing on outcomes, governance turns from a checklist into a strategy that ensures LLMs add real business value.
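As a rough illustration of outcome-based measurement, the sketch below scores a sample of reviewed chatbot responses on both speed and policy alignment. Everything here is assumed for the example: the `ReviewedResponse` record, the idea that a human reviewer sets `policy_aligned`, and the 0.95 default threshold are placeholders, not recommended values.

```python
from dataclasses import dataclass
from typing import Dict, List, Union


@dataclass(frozen=True)
class ReviewedResponse:
    latency_seconds: float
    policy_aligned: bool  # assumed to be judged by a human reviewer


def summarize(
    samples: List[ReviewedResponse],
    min_alignment: float = 0.95,  # hypothetical acceptance threshold
) -> Dict[str, Union[float, bool]]:
    """Report mean latency and alignment rate, and whether alignment is acceptable."""
    if not samples:
        raise ValueError("At least one reviewed response is required.")
    alignment_rate = sum(s.policy_aligned for s in samples) / len(samples)
    mean_latency = sum(s.latency_seconds for s in samples) / len(samples)
    return {
        "alignment_rate": alignment_rate,
        "mean_latency_seconds": mean_latency,
        # Speed is reported, but only alignment decides acceptability here.
        "acceptable": alignment_rate >= min_alignment,
    }
```

A chatbot that answers quickly but drifts from internal policy would fail this check, which is the distinction the paragraph above draws between speed and outcome.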

At SureStep, our view is that governing LLMs is not about chasing every new release or fine-tuning every parameter. It’s about understanding the business context, designing guardrails, and aligning data outcomes to strategic goals. Just as with broader GRC, success comes from focusing less on the technology itself and more on how people, process, and governance bring it to life.

If your organization is experimenting with LLMs, a structured governance approach will determine whether they become a trusted business tool or a source of hidden risk. Now is the time to design that framework before adoption scales further.
