As regulatory expectations evolve and transaction volumes continue to surge, the pressure on financial institutions to detect financial crime efficiently has never been greater. Artificial Intelligence (AI) promises to help, but many anti-money laundering (AML) programs remain stuck between pilot models and production reality. The difference between a promising algorithm and a practical capability lies not in data science alone but in disciplined execution and governance.

Here are three practical ways to make AI deliver measurable value in your AML program.

1. Build from Trusted Data, Not Just Available Data

AI cannot compensate for poor data hygiene. Most AML ecosystems remain fragmented, with customer, transactional, and sanctions data often residing in disconnected silos, captured at varying levels of completeness and timeliness. Before deploying any AI component, focus on establishing data lineage, standardization, and quality monitoring.

Consistent, traceable data not only improves model accuracy but also strengthens defensibility when regulators review your detection logic. A model trained on reliable, well-documented data will typically outperform even the most complex architecture built on weak inputs.

Mature programs integrate their data quality checks directly into model pipelines, ensuring every score or classification is backed by verifiable inputs.
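As a minimal sketch of what such an in-pipeline check might look like, the Python below gates scoring on a few completeness, validity, and timeliness rules and fingerprints the inputs so each score can be traced back to the exact record it used. The field names, the one-day staleness threshold, and the `check_record` and `score_with_gate` helpers are hypothetical illustrations, not part of any specific AML product.

```python
import hashlib
import json
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Callable

# Hypothetical schema and threshold; a real program would take these from its data dictionary.
REQUIRED_FIELDS = ("customer_id", "amount", "currency", "booked_at")
MAX_STALENESS = timedelta(days=1)


@dataclass
class QualityResult:
    passed: bool
    issues: list


def check_record(record: dict, now: datetime) -> QualityResult:
    """Apply completeness, validity, and timeliness rules to one transaction record."""
    issues = []
    for name in REQUIRED_FIELDS:
        if record.get(name) in (None, ""):
            issues.append(f"missing:{name}")
    amount = record.get("amount")
    if amount is not None and (not isinstance(amount, (int, float)) or amount < 0):
        issues.append("invalid:amount")
    booked_at = record.get("booked_at")
    if isinstance(booked_at, datetime) and now - booked_at > MAX_STALENESS:
        issues.append("stale:booked_at")
    return QualityResult(passed=not issues, issues=issues)


def input_fingerprint(record: dict) -> str:
    """Stable SHA-256 hash of the record, stored alongside the score for lineage."""
    payload = json.dumps(record, sort_keys=True, default=str)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def score_with_gate(record: dict, now: datetime, model: Callable[[dict], float]) -> dict:
    qc = check_record(record, now)
    if not qc.passed:
        # Quarantine for remediation rather than emit a score backed by bad inputs.
        return {"score": None, "status": "quarantined", "issues": qc.issues}
    return {
        "score": model(record),
        "status": "scored",
        "issues": [],
        "input_hash": input_fingerprint(record),
    }
```

Quarantined records would go to a remediation queue, while the stored `input_hash` lets a reviewer later confirm which inputs produced a given score.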

2. Use AI to Enhance Analysts, Not Replace Them

Early-stage AI deployments often overreach by trying to replace human judgment. The most successful institutions instead use AI to make investigators more efficient.

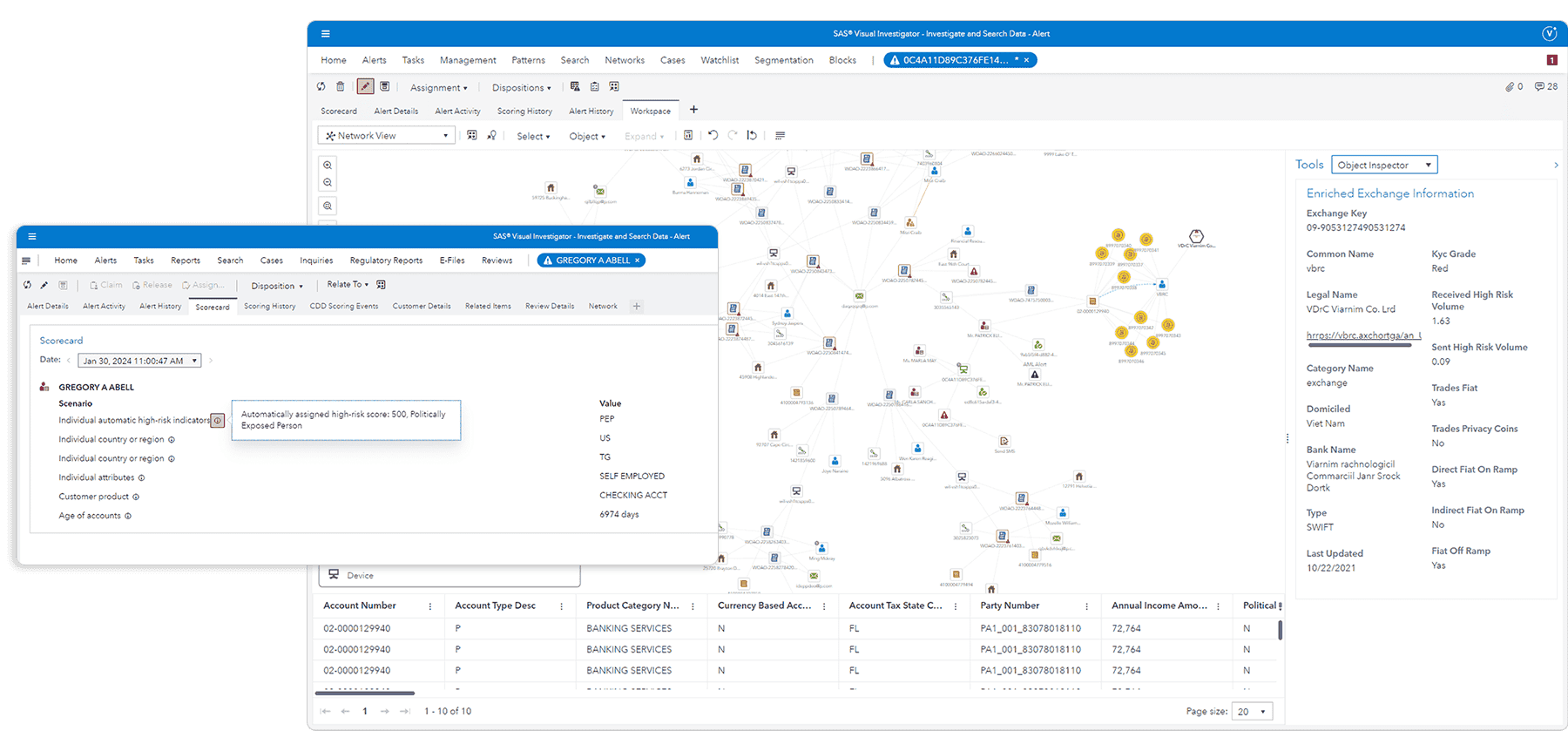

Start with lower-risk, high-volume use cases, such as clustering similar alerts, summarizing narrative text, prioritizing cases for review, or identifying false-positive patterns. These quick wins deliver measurable return on investment (ROI) while building in explainability and control.
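To make the alert-clustering idea concrete, here is a deliberately simple sketch: it groups alerts whose narratives share enough words (Jaccard similarity over word sets) using greedy leader clustering, then lists the largest groups first so an analyst can review similar alerts together. Production systems would more likely use richer features and an established clustering algorithm; the `narrative` field, the 0.5 threshold, and the function names here are assumptions for illustration only.

```python
def tokens(text: str) -> set:
    """Lower-cased word set for a narrative."""
    return {t for t in text.lower().split() if t}


def jaccard(a: set, b: set) -> float:
    """Size of the intersection divided by size of the union (1.0 for two empty sets)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def cluster_alerts(alerts: list, threshold: float = 0.5) -> list:
    """Greedy leader clustering: each alert joins the first cluster whose leader
    is at least `threshold` similar; otherwise it starts a new cluster."""
    clusters = []  # list of (leader_tokens, [alert_ids])
    for alert in alerts:
        toks = tokens(alert["narrative"])
        for leader, members in clusters:
            if jaccard(toks, leader) >= threshold:
                members.append(alert["id"])
                break
        else:
            clusters.append((toks, [alert["id"]]))
    # Largest clusters first, so analysts can review similar alerts as a batch.
    return sorted((members for _, members in clusters), key=len, reverse=True)
```

The grouping only suggests batches; each alert still receives an analyst's decision, which keeps the use case assistive rather than autonomous.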

By automating routine work, institutions free analysts to focus on higher-order judgment and investigative depth, improving both productivity and case quality.

Favor “assistive intelligence” models that provide insights to guide human decisions over models that make independent determinations.

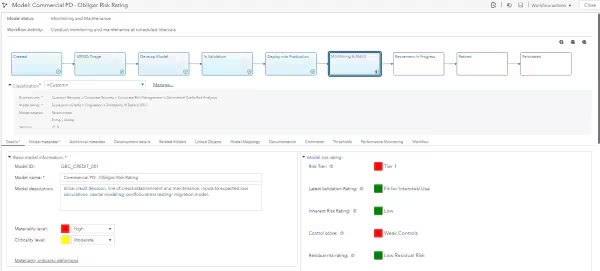

3. Embed AI into Model Governance

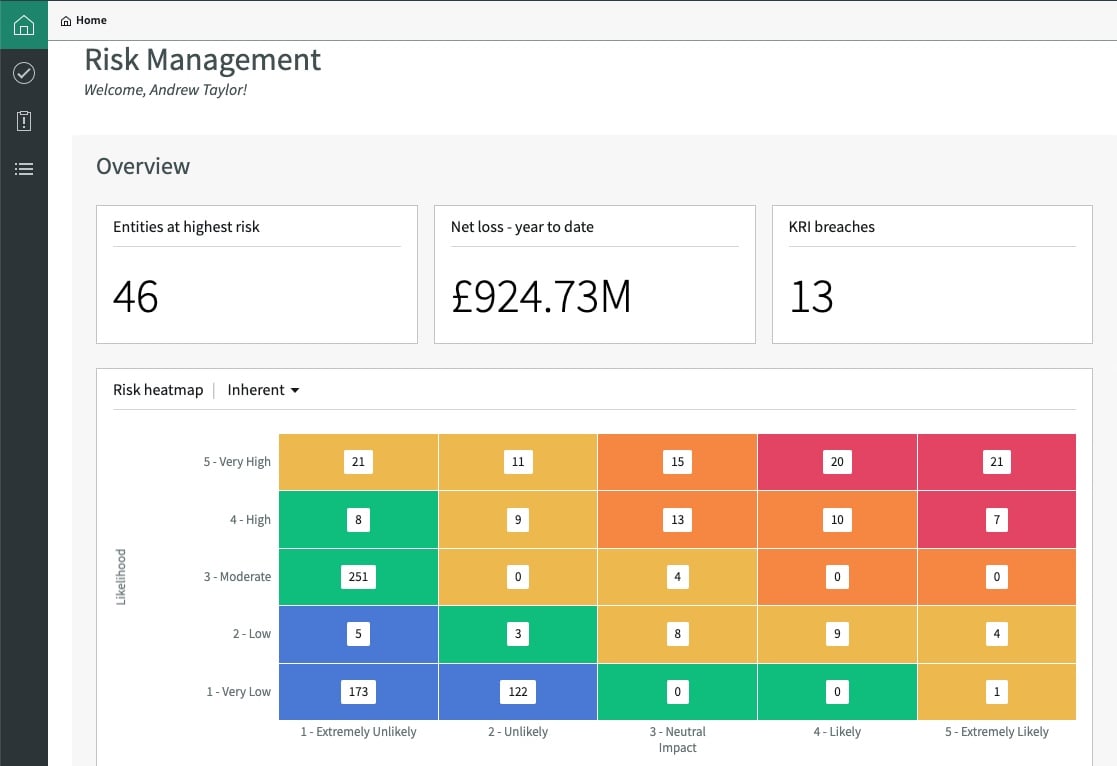

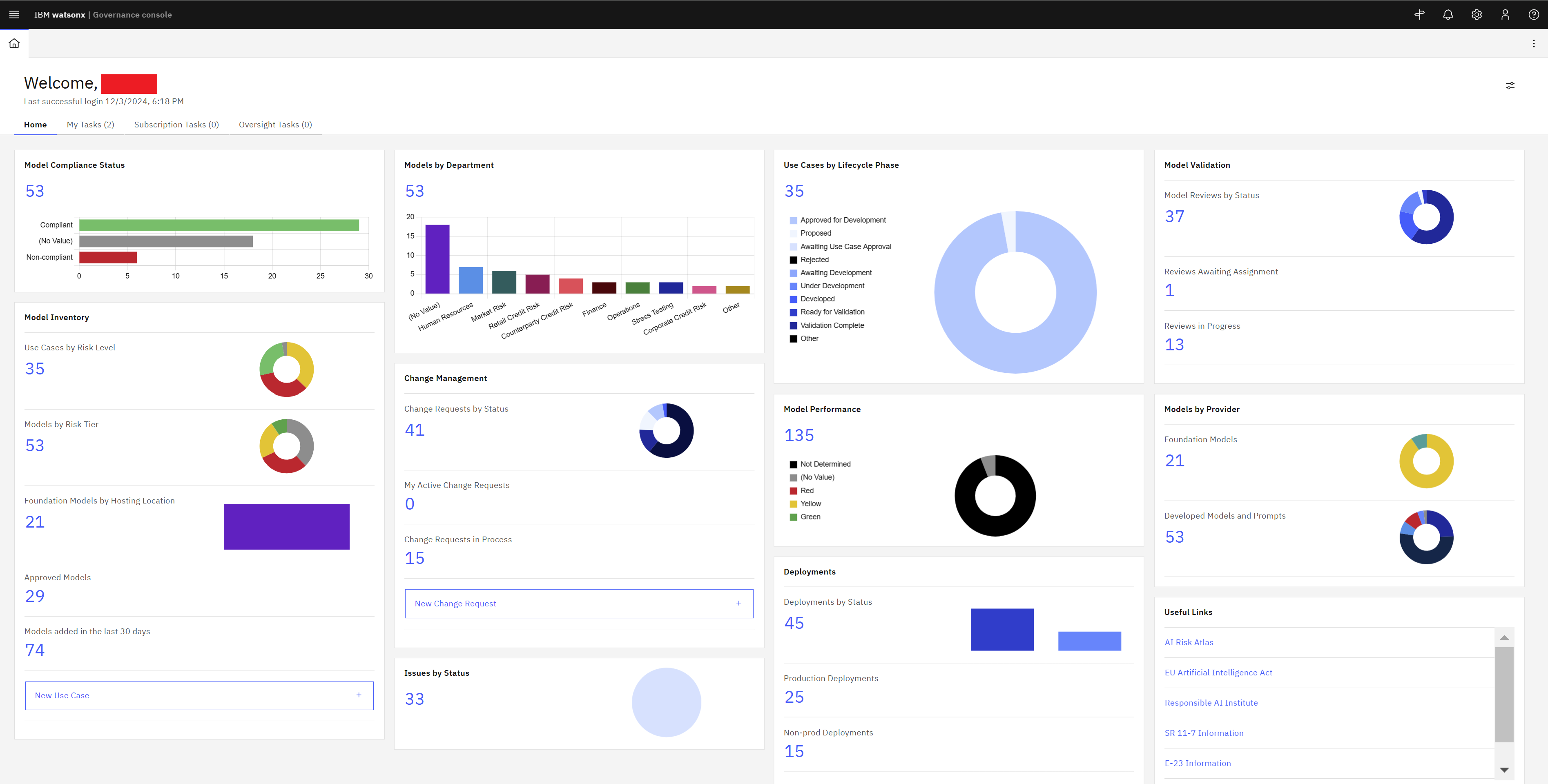

AI in AML is no longer experimental; it is a regulated component of the model landscape. Whether under the Federal Reserve's SR 11-7 guidance, OSFI Guideline E-23, or the EU AI Act, financial institutions must demonstrate traceability, accountability, and explainability for every AI model in production.

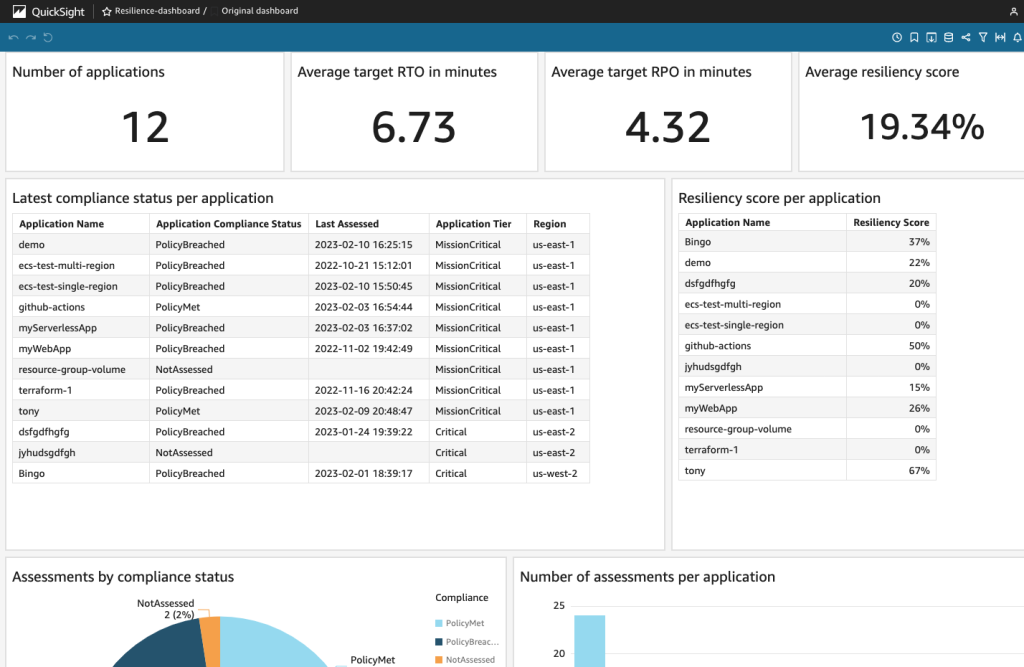

This requires integration, not isolation. AI initiatives should sit within existing Model Risk Management (MRM) frameworks, complete with version control, validation, and monitoring. Transparent, explainable outputs, especially for alert scoring or risk classification, build confidence with both regulators and internal audit.

Treat every AI model as a living control. Establish clear definitions for ownership, validation cadence, and change protocols from the outset.
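One way to make ownership, validation cadence, and change protocols explicit is to keep them as structured metadata next to each model. The sketch below is a hypothetical record, not an SR 11-7 or MRM-tool schema: it flags when a periodic validation is due, requires an approver other than the owner for each release, and marks the model as needing revalidation after any change. The field names, the maker-checker rule, and the revalidation-on-release policy are assumptions chosen for illustration.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta


@dataclass
class ModelRecord:
    model_id: str
    version: str
    owner: str
    validation_interval_days: int      # assumed periodic validation cadence
    last_validated: date
    pending_validation: bool = False   # set when a change has not yet been validated
    change_log: list = field(default_factory=list)

    def validation_due(self, today: date) -> bool:
        """Due if a change awaits validation or the periodic interval has elapsed."""
        next_due = self.last_validated + timedelta(days=self.validation_interval_days)
        return self.pending_validation or today >= next_due

    def release(self, new_version: str, reason: str, approved_by: str) -> None:
        """Record a version change; the approver must differ from the owner."""
        if approved_by == self.owner:
            raise ValueError("a change must be approved by someone other than the model owner")
        self.change_log.append(
            f"{self.version} -> {new_version}: {reason} (approved by {approved_by})"
        )
        self.version = new_version
        self.pending_validation = True

    def record_validation(self, on: date) -> None:
        """Mark the current version as validated and restart the cadence."""
        self.last_validated = on
        self.pending_validation = False
```

Keeping the change log on the record itself gives auditors a single place to see who changed what, why, and whether the change has been validated.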

Making It Real with SAS AML

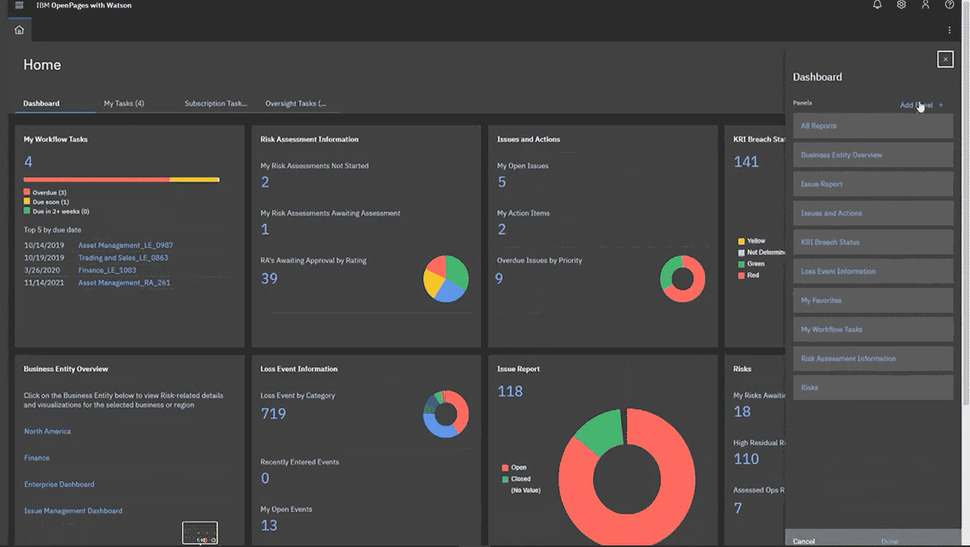

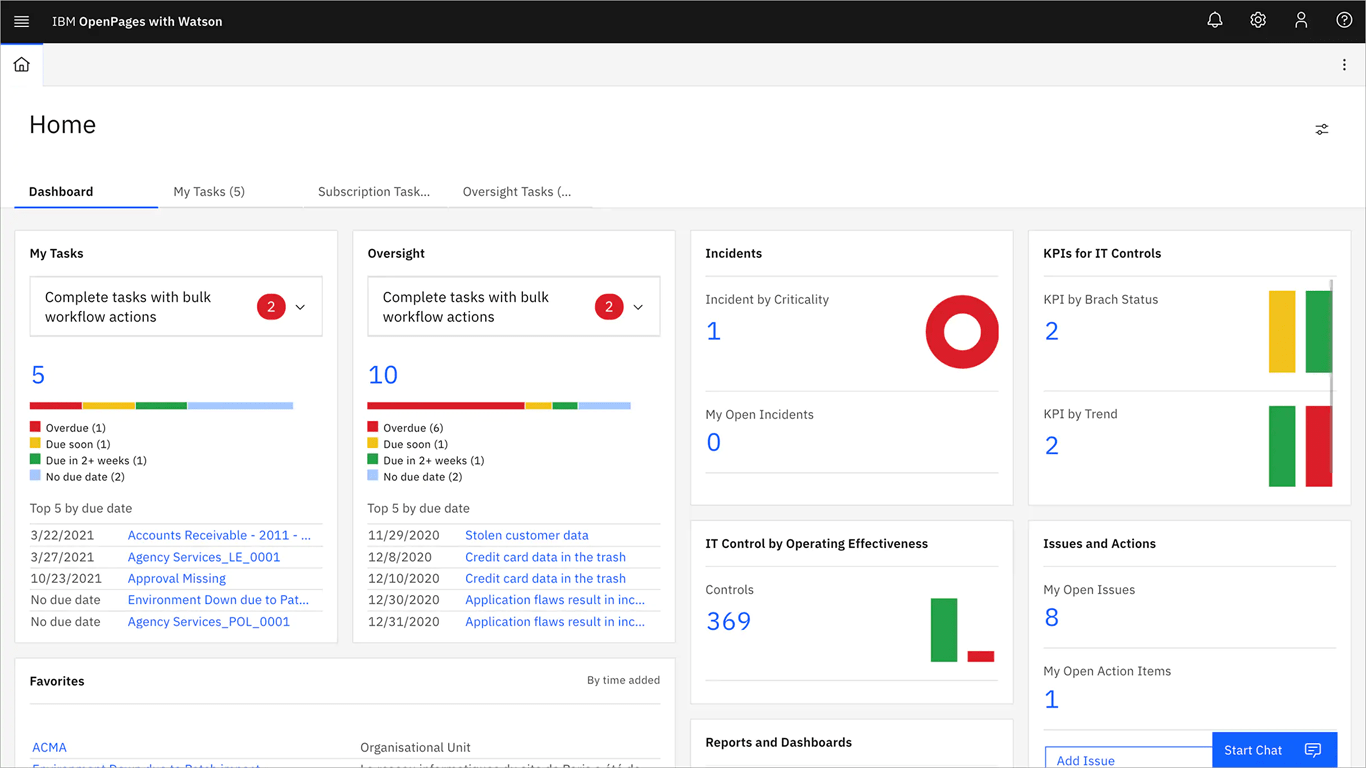

SAS Anti-Money Laundering (SAS AML) provides a mature foundation for deploying practical AI within compliant boundaries. Its AI and machine learning capabilities are embedded directly into the detection and investigation workflows, allowing institutions to apply advanced analytics while maintaining complete transparency and auditability.

Through the SAS Viya platform, models can be trained, validated, and monitored in a single environment, bridging data management, AI development, and regulatory governance. The result is a scalable, explainable approach to intelligent AML that aligns innovation with compliance.
